Reinforcement Learning with the Snake Game


Reinforcement Learning (RL) is like training a pet: the agent (learner) explores its environment, takes actions, and gets rewards or penalties. Over time, it learns which actions lead to better rewards and avoids actions that result in penalties.

Why Use Reinforcement Learning?

RL is useful when:

- We don’t have labeled data but need to learn from experience.

- The goal is to maximize long-term rewards, not just immediate results.

- The environment is dynamic, and actions affect future states.

Think of teaching a child how to ride a bike: they try, fall (penalty), adjust, and eventually succeed (reward). RL follows the same concept!

More formally, RL is a type of machine learning in which an agent learns by interacting with an environment and receiving rewards or penalties for its actions. The goal is to maximize the total reward collected over time.

Key Components:

- Agent: The learner (e.g., a snake in a game).

- Environment: The world it interacts with.

- State: The agent’s current situation.

- Action: What the agent can do.

- Reward: Feedback based on action taken.
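To make the five components concrete, here is a minimal sketch of the agent-environment loop. The `CorridorEnv` class, the `run_episode` helper, and the reward values are hypothetical and exist only for illustration; they are not part of the Snake code later in this post.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: cells 0..4 in a row; reaching the last cell ends the episode."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0  # state: the agent's current cell

    def step(self, action):
        """Apply an action (-1 = left, +1 = right) and return (next_state, reward, done)."""
        self.position = min(max(self.position + action, 0), self.length - 1)
        done = self.position == self.length - 1
        reward = 10 if done else -1  # goal reward, small cost for every other step
        return self.position, reward, done


def run_episode(env, choose_action, max_steps=100):
    """Run one episode and return the total reward collected."""
    state, total_reward = env.position, 0
    for _ in range(max_steps):
        action = choose_action(state)           # the agent picks an action from the state
        state, reward, done = env.step(action)  # the environment responds
        total_reward += reward
        if done:
            break
    return total_reward


def random_agent(state):
    return random.choice([-1, 1])


def always_right(state):
    return 1


print(run_episode(CorridorEnv(), always_right))  # three steps at -1, then +10: prints 7
```

The random agent usually collects less total reward than `always_right`, which is the gap a learning algorithm tries to close.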

2. The Snake Game as an RL Problem

Why Use the Snake Game?

The Snake Game is a great RL example because:

- It has clear rewards and penalties: Eating food is good, hitting walls or itself is bad.

- It requires planning: The snake must learn to reach food efficiently while avoiding obstacles.

- It’s simple but challenging: The game is easy to understand but requires strategic moves to maximize the score.

Mapping the Game to RL Components

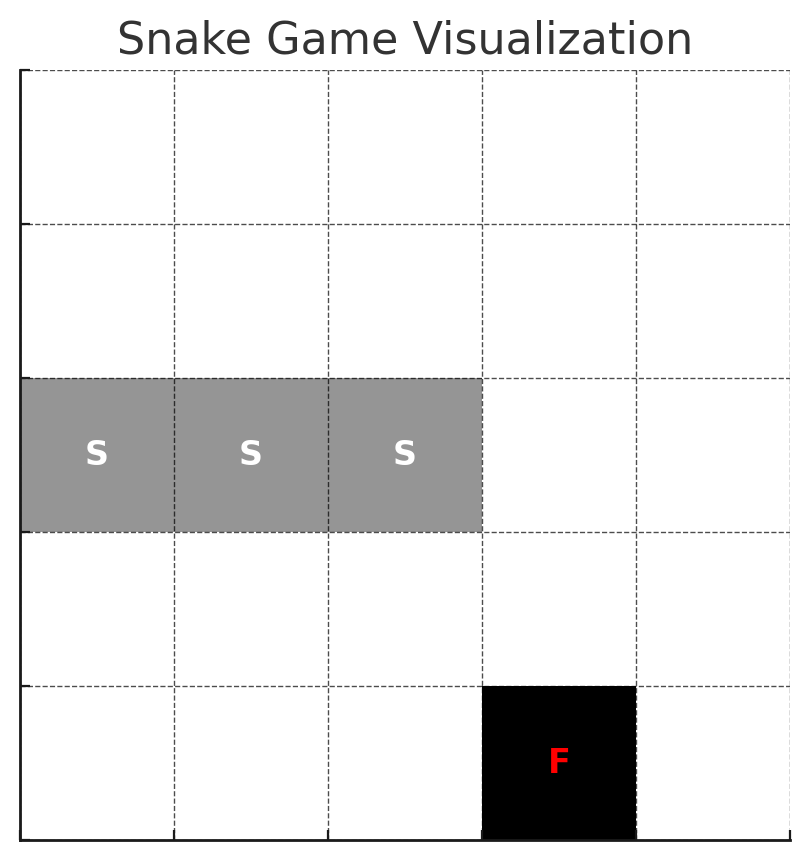

The classic Snake Game maps cleanly onto the RL components. With RL, the snake learns to survive longer and score higher without being explicitly programmed with rules.

- Agent: The snake.

- Environment: The game grid.

- State: Snake’s position, food, and obstacles.

- Actions: Move up, down, left, or right.

- Rewards:

  - +10 for eating food.

  - -10 for hitting a wall or itself.

  - -1 per step to encourage efficiency.
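The mapping above can be sketched in code. The `encode_state` and `reward_for` helpers below are hypothetical names, and the compact state encoding (food direction plus four danger flags) is one common choice rather than the only option. Positions are assumed to be `(row, col)` tuples with the head at `snake[0]`.

```python
def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def encode_state(snake, food, width, height):
    """Compact state: where the food lies relative to the head, plus danger flags."""
    head_row, head_col = snake[0]
    food_direction = (sign(food[0] - head_row), sign(food[1] - head_col))

    def is_blocked(cell):
        row, col = cell
        off_grid = not (0 <= row < height and 0 <= col < width)
        return off_grid or cell in snake

    # One flag per neighbouring cell, in the order up, down, left, right.
    danger = tuple(
        is_blocked((head_row + d_row, head_col + d_col))
        for d_row, d_col in [(-1, 0), (1, 0), (0, -1), (0, 1)]
    )
    return food_direction + danger


def reward_for(ate_food, crashed):
    """Reward scheme from the list above."""
    if crashed:
        return -10
    if ate_food:
        return 10
    return -1


# Head in the top-left corner, food below and to the right.
print(encode_state([(0, 0)], (2, 3), width=10, height=10))
# (1, 1, True, False, True, False)
```

A small encoding like this keeps the number of distinct states low, which matters once the values are stored in a lookup table.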

3. RL Concepts in the Snake Game

Policy:

The agent’s strategy for choosing actions.

- Simple policy: Always move toward the food.

- Learned policy: Uses RL to find the best actions over time.
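The simple policy can be written by hand in a few lines. This is a sketch under assumptions: `move_toward_food` is a hypothetical helper, positions are `(row, col)` tuples, and "up" means a smaller row index.

```python
def move_toward_food(head, food):
    """Hand-written policy: close the row gap first, then the column gap."""
    head_row, head_col = head
    food_row, food_col = food
    if food_row < head_row:
        return "up"
    if food_row > head_row:
        return "down"
    if food_col < head_col:
        return "left"
    return "right"


print(move_toward_food((5, 5), (2, 5)))  # up
print(move_toward_food((5, 5), (5, 1)))  # left
```

This policy ignores the snake's own body, so it can steer a long snake straight into itself. A learned policy can, in principle, discover when a detour is worth the extra steps.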

Q-Learning:

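A popular RL algorithm that estimates a Q-value for each state-action pair, that is, the expected long-term reward of taking action \(a\) in state \(s\). After every step, the estimate is updated as:

\(Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]\)

Where: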

- \(s\): Current state.

- \(a\): Action taken.

- \(r\): Reward received.

- \(s'\): Next state; \(a'\) ranges over the actions available in it.

- \(\alpha\): Learning rate.

- \(\gamma\): Discount factor for future rewards.
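A single update with made-up numbers shows how the formula behaves. The `q_update` function below is an illustrative helper, not part of the agent class later in this post, and the input values are arbitrary.

```python
def q_update(q_old, reward, next_q_values, alpha=0.1, gamma=0.9):
    """One Q-learning update for a single state-action pair."""
    td_target = reward + gamma * max(next_q_values)  # r + gamma * max_a' Q(s', a')
    return q_old + alpha * (td_target - q_old)


# Current estimate 2.0, step reward -1, best next-state value 5.0:
# target = -1 + 0.9 * 5.0 = 3.5, so the estimate moves 10% of the way from 2.0 to 3.5.
new_q = q_update(q_old=2.0, reward=-1, next_q_values=[0.0, 5.0, 1.0, 3.0])
print(round(new_q, 2))  # 2.15
```

A larger \(\alpha\) moves the estimate faster toward the target, while a smaller \(\gamma\) makes the agent care less about what happens after the next step.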

4. Learn with Coding: Implementing RL in Snake

Step 1: Create the Snake Game

    import random


    class SnakeGame:
        """Minimal grid-based Snake. All positions are (row, col) tuples."""

        def __init__(self, width=10, height=10):
            self.width, self.height = width, height
            self.snake = [(height // 2, width // 2)]  # head is snake[0]
            self.food = self._place_food()
            self.direction = (0, 1)  # start out moving right
            self.score = 0

        def _place_food(self):
            # Sample cells until one is not occupied by the snake.
            while True:
                food = (random.randint(0, self.height - 1), random.randint(0, self.width - 1))
                if food not in self.snake:
                    return food

        def step(self, action):
            """Apply one action and return (reward, done)."""
            directions = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}
            self.direction = directions.get(action, self.direction)
            new_head = (self.snake[0][0] + self.direction[0], self.snake[0][1] + self.direction[1])

            hit_wall = not (0 <= new_head[0] < self.height and 0 <= new_head[1] < self.width)
            if hit_wall or new_head in self.snake:
                return -10, True  # Game over

            self.snake.insert(0, new_head)
            if new_head == self.food:
                # The snake grows by keeping its tail.
                self.food = self._place_food()
                self.score += 10
                return 10, False

            self.snake.pop()  # Normal move: drop the tail so the length stays the same
            return -1, False

        def render(self):
            grid = [[" " for _ in range(self.width)] for _ in range(self.height)]
            for row, col in self.snake:
                grid[row][col] = "S"
            grid[self.food[0]][self.food[1]] = "F"
            for line in grid:
                print("|" + "|".join(line) + "|")
            print(f"Score: {self.score}")

Step 2: Create the Q-Learning Agent

    class QLearningAgent:
        def __init__(self, actions, alpha=0.1, gamma=0.9, epsilon=0.1):
            self.actions = actions
            self.alpha = alpha      # learning rate
            self.gamma = gamma      # discount factor
            self.epsilon = epsilon  # probability of taking a random action
            self.q_table = {}       # maps (state, action) to a Q-value; missing entries count as 0

        def get_action(self, state):
            # Epsilon-greedy: explore with probability epsilon, otherwise exploit.
            if random.uniform(0, 1) < self.epsilon:
                return random.choice(self.actions)
            return self._best_action(state)

        def _best_action(self, state):
            q_values = [self.q_table.get((state, a), 0) for a in self.actions]
            return self.actions[q_values.index(max(q_values))]

        def update_q_table(self, state, action, reward, next_state, done=False):
            old_value = self.q_table.get((state, action), 0)
            # A terminal step has no future reward to bootstrap from.
            next_max = 0 if done else max(self.q_table.get((next_state, a), 0) for a in self.actions)
            self.q_table[(state, action)] = old_value + self.alpha * (reward + self.gamma * next_max - old_value)

Step 3: Train the Agent

    agent = QLearningAgent(actions=["up", "down", "left", "right"])

    for episode in range(100):
        game = SnakeGame()  # start every episode from a fresh game
        state = game.snake[0]  # the state is the head position only
        done = False
        while not done:
            action = agent.get_action(state)
            reward, done = game.step(action)
            next_state = game.snake[0]
            agent.update_q_table(state, action, reward, next_state, done)
            state = next_state
        game.render()  # show the final board of the episode
        print(f"Episode {episode + 1}, Score: {game.score}")
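After training, it can help to read the greedy action for each visited state out of the Q-table. The sketch below uses a small hand-made table with invented values, keyed by `(state, action)` in the same way as the agent's `q_table`. The `greedy_policy` helper is a hypothetical name.

```python
def greedy_policy(q_table, actions):
    """Return {state: best_action} for every state that appears in the Q-table."""
    states = {state for state, _ in q_table}
    return {
        state: max(actions, key=lambda a: q_table.get((state, a), 0))
        for state in states
    }


actions = ["up", "down", "left", "right"]

# Hand-made Q-table with invented values; states are (row, col) head positions.
q_table = {
    ((5, 5), "up"): 1.2,
    ((5, 5), "right"): -0.4,
    ((0, 3), "up"): -10.0,   # moving up from the top row hits the wall
    ((0, 3), "down"): 0.5,
}

policy = greedy_policy(q_table, actions)
print(policy[(5, 5)], policy[(0, 3)])  # up down
```

Missing entries default to 0, so an action the agent never tried can still beat one it learned to avoid.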

5. Key Takeaways

- RL is learning by interacting with an environment.

- Q-Learning helps agents make better decisions over time.

- Building from scratch strengthens understanding.

For a more detailed example, see Rebecca’s Pick-up Dropoff design using reinforcement learning.

🤖 Disclaimer: This post was inspired by an Educative.io AI learning course. It was generated with AI assistance, then reviewed and refined by Dr. Rebecca Li, blending AI efficiency with human expertise for a balanced perspective.